Machine Learning¶

Introduction to Machine Learning¶

The Wild Dolphin Project: http://www.wilddolphinproject.org/

CHAT (Cetacean Hearing and Telemetry): http://www.wilddolphinproject.org/our-research/chat-research/

Challenge Question¶

Answer C

Decision Trees are understandable and easy to explain.

k-Nearest Neighbors¶

Figure legend: Normal Bite (O), Cookie Cutter Bite (+)

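To classify a new example, find the nearest example in the training data set and apply its label. With k nearest neighbors, take a majority vote among the k closest training examples instead.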
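A minimal sketch of the nearest-neighbor rule, assuming each example is a numeric feature tuple and closeness is Euclidean distance. The function name `knn_classify` and the training points are hypothetical; they only reuse the O and + labels from the figure.

```python
import math
from collections import Counter

def knn_classify(train, query, k=1):
    """Label `query` by majority vote among its k nearest training examples.

    train: list of (feature_tuple, label) pairs; distance is Euclidean.
    """
    # Sort training examples by distance to the query and keep the k closest.
    neighbors = sorted(train, key=lambda ex: math.dist(ex[0], query))[:k]
    # Majority vote over their labels (k=1 is the plain nearest-neighbor rule).
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]

# Hypothetical 2-D training points.
train = [((1.0, 1.0), "O"), ((1.5, 2.0), "O"), ((5.0, 5.0), "+"), ((6.0, 5.5), "+")]
print(knn_classify(train, (1.2, 1.4)))       # O
print(knn_classify(train, (5.5, 5.0), k=3))  # +
```

An odd k avoids ties in a two-class vote.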

Cross Validation¶


Note: When applying k-fold cross-validation, you usually assign samples to the training and test sets randomly. In certain cases, however, such as time-series or sequence data, random selection may not be a valid approach, because later samples can leak into the training set and make the model look better than it really is.
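A minimal sketch of how the folds might be generated with only the standard library. `k_fold_indices` assigns samples to folds at random. `time_series_splits` shows one common alternative for sequence data (forward chaining), where each test block is scored by a model trained only on earlier samples. Both function names are hypothetical, and the forward-chaining version assumes n > k.

```python
import random

def k_fold_indices(n, k, seed=0):
    """Yield (train_idx, test_idx) pairs; samples are shuffled once, then cut into k folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    # Fold sizes differ by at most one when n is not divisible by k.
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = idx[start:start + size]
        train = idx[:start] + idx[start + size:]
        yield train, test
        start += size

def time_series_splits(n, k):
    """Forward chaining: train on samples 0..t-1, test on the next contiguous block."""
    block = n // (k + 1)
    for i in range(1, k + 1):
        train = list(range(0, i * block))
        # The last test block absorbs any leftover samples.
        test = list(range(i * block, (i + 1) * block if i < k else n))
        yield train, test

for train, test in time_series_splits(10, 4):
    print(train, "->", test)
```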

Cross Validation Quiz¶

It is claimed that the number of pizza deliveries to the Pentagon was used to predict the start of the first Iraq War.

The Gaussian Distribution¶

Central Limit Theorem¶

Grasshoppers Vs Katydids¶

Naive Bayes Classifier with insect examples: http://www.cs.ucr.edu/~eamonn/CE/Bayesian%20Classification%20withInsect_examples.pdf
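Following the single-feature insect example, a Bayes classifier can model each class's measurement with a Gaussian whose mean and variance are the maximum-likelihood estimates, then choose the class with the largest prior times likelihood. This is a sketch under assumptions: the antenna lengths below are made-up numbers, not data from the linked tutorial, and naive Bayes would extend it by multiplying one such likelihood per feature.

```python
import math
from statistics import mean, pvariance

def gaussian_pdf(x, mu, var):
    return math.exp(-(x - mu) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def fit(samples_by_class):
    """Per class: prior from class frequency, plus ML mean and (population) variance."""
    total = sum(len(xs) for xs in samples_by_class.values())
    return {c: (len(xs) / total, mean(xs), pvariance(xs))
            for c, xs in samples_by_class.items()}

def classify(model, x):
    # Bayes rule: P(class | x) is proportional to P(x | class) * P(class);
    # the evidence P(x) is the same for every class, so it can be ignored.
    return max(model, key=lambda c: model[c][0] * gaussian_pdf(x, model[c][1], model[c][2]))

# Hypothetical antenna lengths for each class.
data = {"grasshopper": [2.0, 2.5, 3.0, 3.5, 3.0], "katydid": [6.0, 7.0, 7.5, 8.0, 6.5]}
model = fit(data)
print(classify(model, 3.2))  # grasshopper
print(classify(model, 7.1))  # katydid
```

The decision boundary is the value of x where the two weighted Gaussians cross.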

Gaussians Quiz¶

Decision Boundaries¶

Recognition Quiz¶

Decision Boundaries¶

Error¶

Bayes Classifier¶

Bayes Rule Quiz¶

Naive Bayes¶

Maximum Likelihood¶

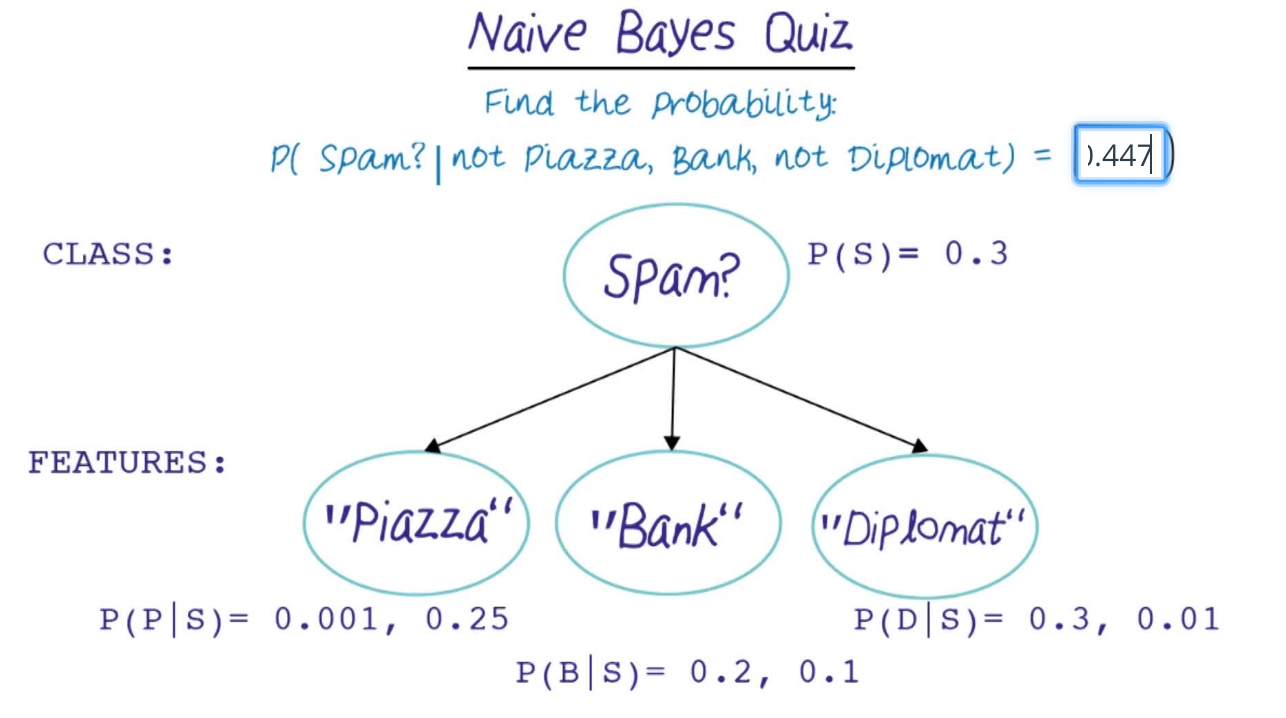

Naive Bayes Quiz¶

No Free Lunch¶

No Free Lunch Theorems for Optimization by David H. Wolpert and William G. Macready

Naive Bayes vs kNN¶

Using a Mixture of Gaussians¶

Kernel Density Estimation.

Cross-Validation to avoid Overfitting.
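Kernel density estimation can be viewed as a mixture with one Gaussian centred on every training sample, all sharing a bandwidth h. One way to use cross-validation here, sketched below as an assumption rather than the lecture's exact procedure, is to pick the h that maximizes the leave-one-out log-likelihood: a very small h puts a spike on each sample and scores the held-out points poorly. The function names and data are hypothetical.

```python
import math

def kde(x, data, h):
    """Gaussian KDE at x: average of Gaussians with std h centred on each sample."""
    return sum(math.exp(-((x - d) / h) ** 2 / 2) for d in data) / (
        len(data) * h * math.sqrt(2 * math.pi))

def loo_log_likelihood(data, h):
    """Score each point under a KDE built from all the other points."""
    total = 0.0
    for i, x in enumerate(data):
        density = kde(x, data[:i] + data[i + 1:], h)
        # A density that underflows to 0 makes this bandwidth unusable.
        total += math.log(density) if density > 0 else -math.inf
    return total

def choose_bandwidth(data, candidates):
    # Too small an h overfits (spiky); too large an h oversmooths.
    return max(candidates, key=lambda h: loo_log_likelihood(data, h))

data = [1.0, 1.2, 1.9, 2.1, 2.2, 5.0, 5.3, 5.4]
h = choose_bandwidth(data, [0.05, 0.1, 0.3, 0.5, 1.0, 2.0])
print(h, kde(2.0, data, h))
```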

Generalizations¶

Decision Tree with Discrete Information¶

Decision Tree Quiz 1¶

Decision Trees with Continuous Information¶

Minimum Description Length¶

Entropy¶

We will use entropy to determine the decision tree branching.
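For a set S whose class labels occur with proportions p_i, entropy is H(S) = -Σ p_i log2 p_i, which is 0 for a pure set and 1 bit for an even two-class split. A minimal sketch, with `entropy` as a hypothetical helper name:

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy, in bits, of a list of class labels."""
    n = len(labels)
    # p * log2(1/p) is the same as -p * log2(p), summed over the classes present.
    return sum((c / n) * math.log2(n / c) for c in Counter(labels).values())

print(entropy(["+", "+", "-", "-"]))                     # 1.0
print(entropy(["a"] * 4))                                # 0.0
print(round(entropy(["yes"] * 9 + ["no"] * 5), 3))       # 0.94
```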

Information Gain¶

Information gain measures how much splitting on an attribute reduces entropy; from it we can figure out the most important attributes.

We can use the same attribute at multiple levels in the decision tree.
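Information gain for an attribute A is H(S) minus the size-weighted entropy of the subsets produced by splitting S on A; the attribute with the largest gain is chosen for the next branch. The sketch below assumes discrete attribute values stored as tuple columns; the data and function names are hypothetical.

```python
import math
from collections import Counter, defaultdict

def entropy(labels):
    n = len(labels)
    return sum((c / n) * math.log2(n / c) for c in Counter(labels).values())

def information_gain(rows, labels, attr):
    """H(labels) minus the weighted entropy after splitting on column `attr`."""
    groups = defaultdict(list)
    for row, label in zip(rows, labels):
        groups[row[attr]].append(label)
    n = len(labels)
    remainder = sum(len(g) / n * entropy(g) for g in groups.values())
    return entropy(labels) - remainder

def best_attribute(rows, labels):
    # The column with the highest information gain becomes the next branch.
    return max(range(len(rows[0])), key=lambda a: information_gain(rows, labels, a))

# Hypothetical examples: (has_wings, color) -> label
rows = [("yes", "green"), ("yes", "brown"), ("no", "green"), ("no", "brown")]
labels = ["fly", "fly", "walk", "walk"]
print(best_attribute(rows, labels))       # 0
print(information_gain(rows, labels, 0))  # 1.0
print(information_gain(rows, labels, 1))  # 0.0
```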

Decision Tree Quiz 2¶

Random Forests¶

Boosting¶

Boosting Quiz¶

Neural Nets¶

Neural Nets Quiz¶


Fill in the truth table for NOR and find weights such that:

a = { true if w0 + i1 w1 + i2 w2 > 0, else false }

Truth table

Enter 1 for True, and 0 (or leave blank) for False in each cell.

All combinations of i1 and i2 must be specified.

Weights

Each weight must be a number between -1.0 and 1.0, accurate to one or two decimal places.

w1 and w2 are the input weights corresponding to i1 and i2 respectively.

w0 is the bias weight.

Activation function

Choose the simplest activation function that can be used to capture this relationship.
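A sketch of a single unit with a step (threshold) activation, using the quiz's rule a = true if w0 + i1 w1 + i2 w2 > 0. NOR is true only for i1 = i2 = 0, so w0 must be positive and each input weight must be negative enough to pull the sum to 0 or below. The weights shown are one valid choice, not necessarily the quiz's expected answer. With all weights restricted to [0, 1] no choice works, because i1 = i2 = 1 would give w0 + w1 + w2 ≥ w0 > 0.

```python
def threshold_unit(inputs, weights, bias):
    """Step activation: True when bias + sum(w_i * i_i) > 0."""
    return bias + sum(w * x for w, x in zip(weights, inputs)) > 0

# One choice of NOR weights (hypothetical, not the official quiz answer).
w0, w1, w2 = 0.5, -1.0, -1.0
for i1 in (0, 1):
    for i2 in (0, 1):
        print(i1, i2, int(threshold_unit((i1, i2), (w1, w2), w0)))
# 0 0 1
# 0 1 0
# 1 0 0
# 1 1 0
```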

Multilayer Nets¶

Perceptron Learning¶

Expressiveness of Perceptron¶

Multilayer Perceptron¶

Back-Propagation¶

Deep Learning¶

Unsupervised Learning¶

k-Means and EM¶

Expectation Maximization

Pattern Recognition and Machine Learning by Christopher Bishop
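k-means can be viewed as a hard-assignment relative of EM: it alternates assigning each point to its nearest centre and moving each centre to the mean of its points. A minimal sketch of this standard (Lloyd's) iteration, assuming points are numeric tuples and the initial centres are k distinct points sampled at random; `k_means` and the sample points are hypothetical.

```python
import math
import random

def k_means(points, k, iters=100, seed=0):
    """Return (centers, clusters) after assignment/update steps converge."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)
    for _ in range(iters):
        # Assignment step: each point joins its nearest centre.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda j: math.dist(p, centers[j]))
            clusters[nearest].append(p)
        # Update step: move each centre to its cluster mean (keep it if empty).
        new_centers = [
            tuple(sum(coord) / len(cl) for coord in zip(*cl)) if cl else centers[j]
            for j, cl in enumerate(clusters)
        ]
        if new_centers == centers:
            break
        centers = new_centers
    return centers, clusters

points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
centers, clusters = k_means(points, 2)
print(centers)
```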

EM and Mixture of Gaussians¶

Using GPS to Learn Significant Locations and Predict Movement Across Multiple Users by Daniel Ashbrook and Thad Starner
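A minimal sketch of EM for a one-dimensional mixture of Gaussians. The E-step computes each point's responsibility under every component, and the M-step re-estimates the mixing weights, means and variances from those soft assignments. The function name, the unit starting variances, the starting means and the fixed iteration count are assumptions, and the code does not guard against a component losing all of its points.

```python
import math

def normal_pdf(x, mu, var):
    return math.exp(-(x - mu) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def em_gmm_1d(xs, mus, iters=50, min_var=1e-6):
    """Fit len(mus) Gaussians to xs; returns (weights, means, variances)."""
    k = len(mus)
    mus = list(mus)
    variances = [1.0] * k
    weights = [1.0 / k] * k
    for _ in range(iters):
        # E-step: r[j] = P(component j | x) under the current parameters.
        resp = []
        for x in xs:
            p = [weights[j] * normal_pdf(x, mus[j], variances[j]) for j in range(k)]
            s = sum(p)
            resp.append([pj / s for pj in p])
        # M-step: responsibility-weighted maximum-likelihood estimates.
        for j in range(k):
            nj = sum(r[j] for r in resp)
            weights[j] = nj / len(xs)
            mus[j] = sum(r[j] * x for r, x in zip(resp, xs)) / nj
            var = sum(r[j] * (x - mus[j]) ** 2 for r, x in zip(resp, xs)) / nj
            variances[j] = max(var, min_var)  # floor keeps a component from collapsing
    return weights, mus, variances

xs = [0.9, 1.1, 1.0, 1.2, 0.8, 5.0, 5.2, 4.8, 5.1, 4.9]
weights, mus, variances = em_gmm_1d(xs, [0.0, 6.0])
print(weights, mus, variances)
```

Replacing the soft responsibilities with a hard 0/1 assignment to the most likely component turns this loop into something very close to k-means.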