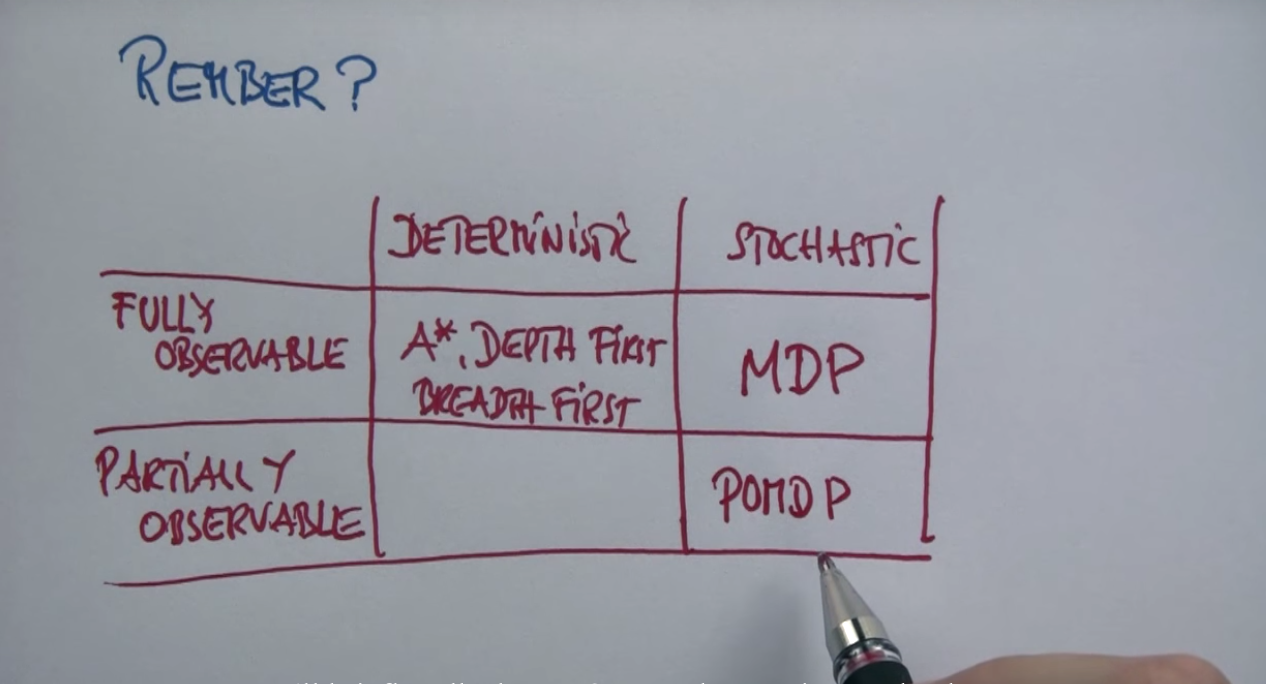

Planning under uncertainty¶

Introduction¶

Decisions under uncertainty.

Planning Under Uncertainty MDP¶

MDP = Markov Decision Process

POMDP = Partially Observable Markov Decision Process

Robot Tour Guide Examples¶

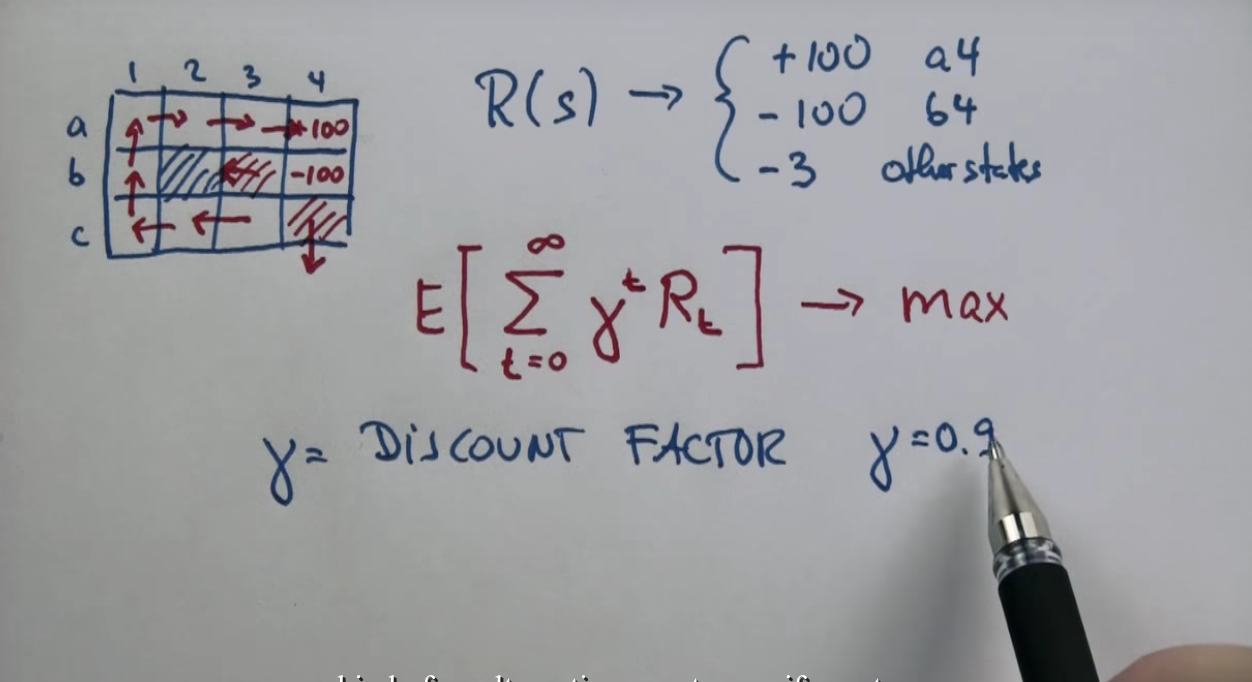

MDP Grid World¶

Problems with Conventional Planning 1¶

Branching Factor is too large¶

Quiz: Branching Factor Question

For this problem (and only this problem), assume actions are stochastic in a different way from the one described in section 4, MDP Grid World.

Instead of an action north possibly going east or west, an action north may go northeast or northwest (i.e. to the diagonal squares).

Likewise for the other directions: for example, an action west may go west, northwest, or southwest.
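The diagonal variant used in this quiz can be written down as a small lookup, which may make it easier to enumerate the outcomes of each action. This is only a sketch: the names `STEP` and `possible_moves` are hypothetical, the `(dx, dy)` convention with north as `+y` is an assumption, and no probabilities are attached because the quiz text does not give any.

```python
# Unit displacements as (dx, dy); treating north as +y is an assumption.
STEP = {"N": (0, 1), "S": (0, -1), "E": (1, 0), "W": (-1, 0)}


def possible_moves(action):
    """Return the intended step plus the two diagonals on either side of it."""
    dx, dy = STEP[action]
    if dx == 0:
        # North/south: the robot may also drift one square left or right.
        return [(0, dy), (-1, dy), (1, dy)]
    # East/west: the robot may also drift one square down or up.
    return [(dx, 0), (dx, -1), (dx, 1)]


for action in STEP:
    print(action, possible_moves(action))
```

For an action west this lists the west, southwest, and northwest displacements, matching the example in the text.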

Problems With Conventional Planning 2¶

Quiz: Policy Question 1¶

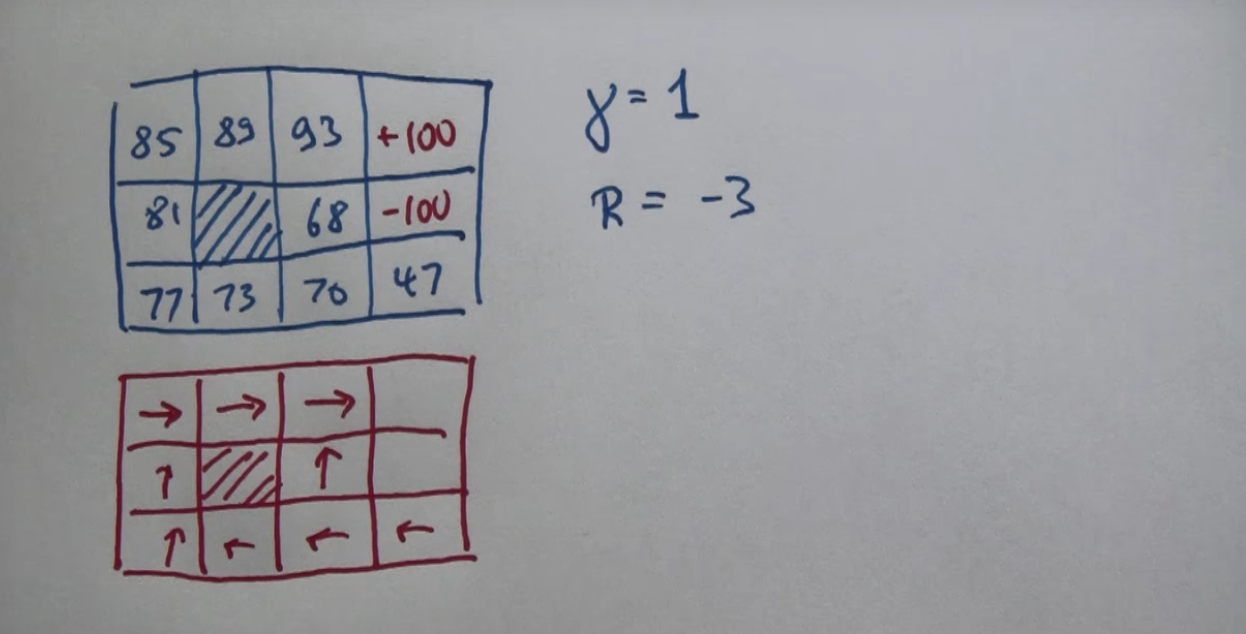

Stochastic actions are as in section 4, MDP Grid World.

An action North moves North with 80% chance; otherwise it moves East with 10% chance or West with 10% chance. Likewise for the other directions.
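As a minimal sketch, this motion model can be expressed as a probability distribution over the direction actually travelled. The 80/10/10 split comes from the text; the names `PERPENDICULAR` and `outcome_distribution` are hypothetical, and reading "likewise for the other directions" as "slip to either perpendicular direction" is an assumption.

```python
# For each intended direction, the two directions the robot may slip into.
# Assumption: the slip is always to a perpendicular direction.
PERPENDICULAR = {
    "N": ("E", "W"),
    "S": ("E", "W"),
    "E": ("N", "S"),
    "W": ("N", "S"),
}


def outcome_distribution(action):
    """Map each direction the robot may actually move in to its probability."""
    side_a, side_b = PERPENDICULAR[action]
    return {action: 0.8, side_a: 0.1, side_b: 0.1}


print(outcome_distribution("N"))
```

A planner would weight the value of each resulting square by these probabilities when comparing actions.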

Quiz: Policy Question 2¶

Stochastic actions are as in section 4, MDP Grid World.

An action North moves North with 80% chance; otherwise it moves East with 10% chance or West with 10% chance. Likewise for the other directions.

Quiz: Policy Question 3¶

Stochastic actions are as in section 4, MDP Grid World.

An action North moves North with 80% chance; otherwise it moves East with 10% chance or West with 10% chance. Likewise for the other directions.

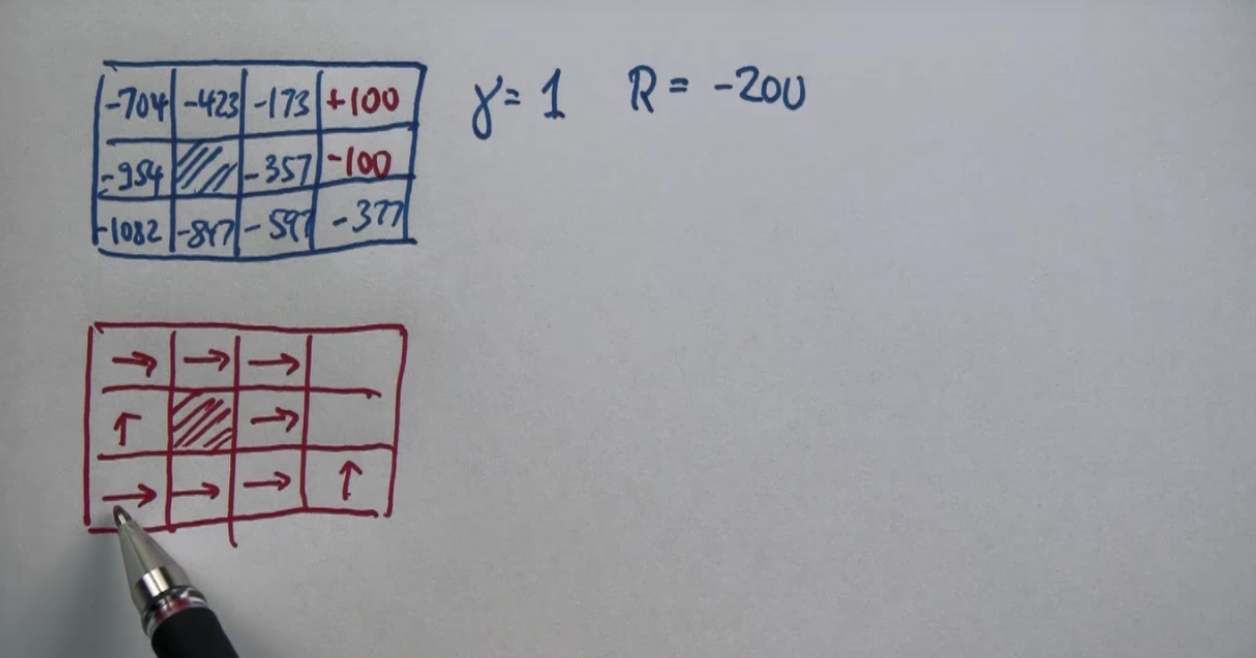

MDP And Costs¶

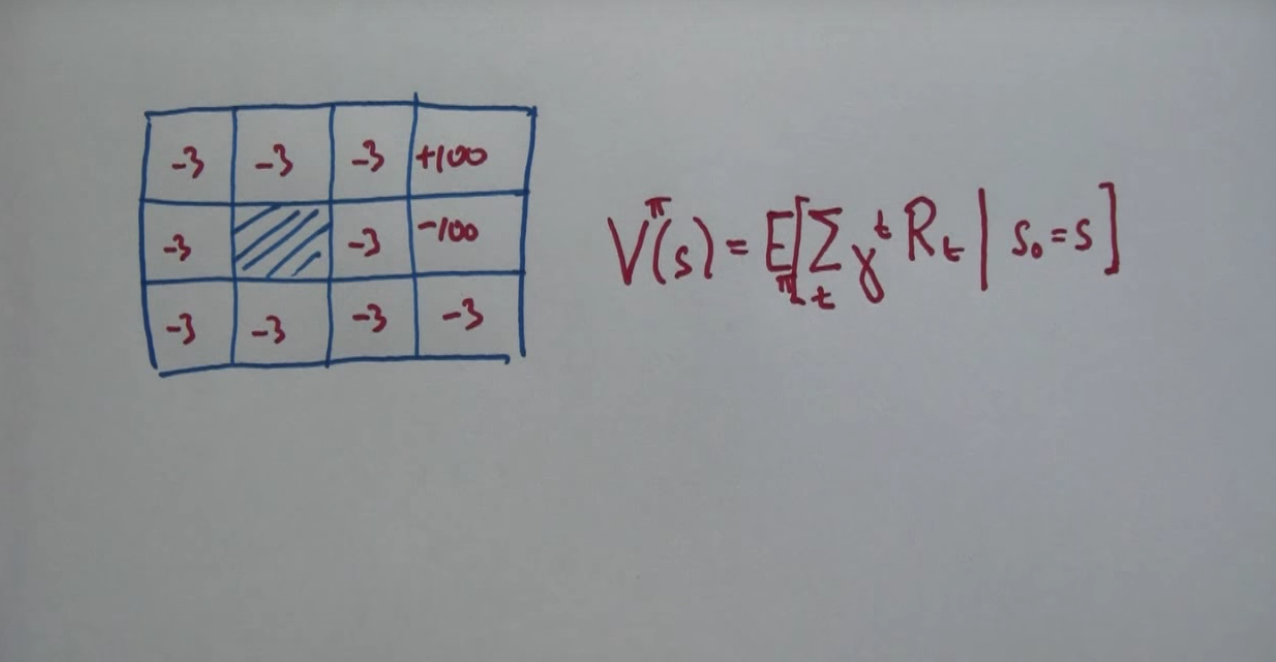

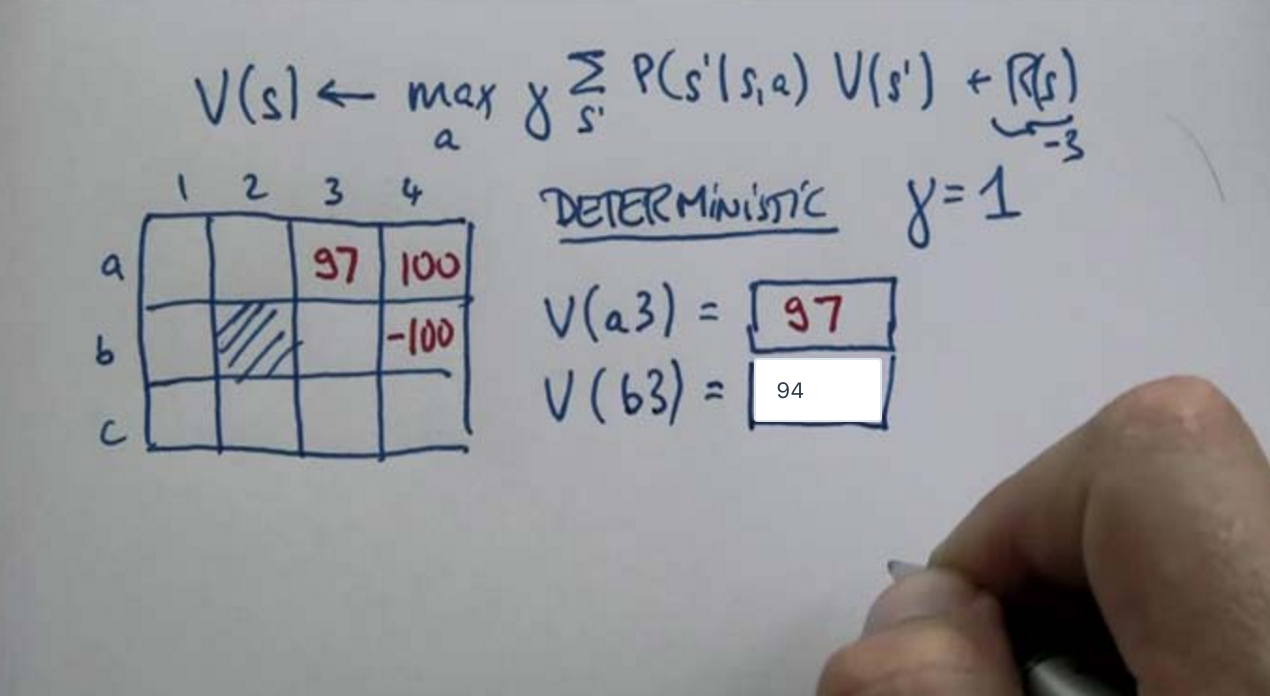

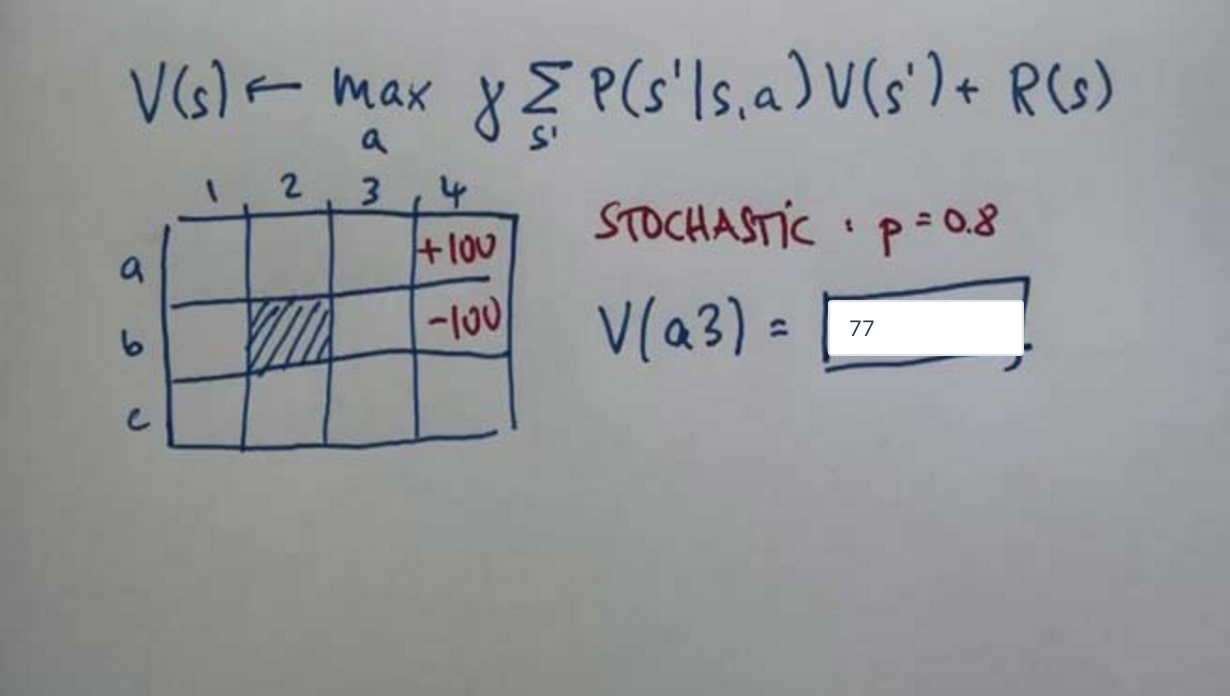

Value Iteration 1¶

Value Iteration 2¶

Value Iteration 3¶

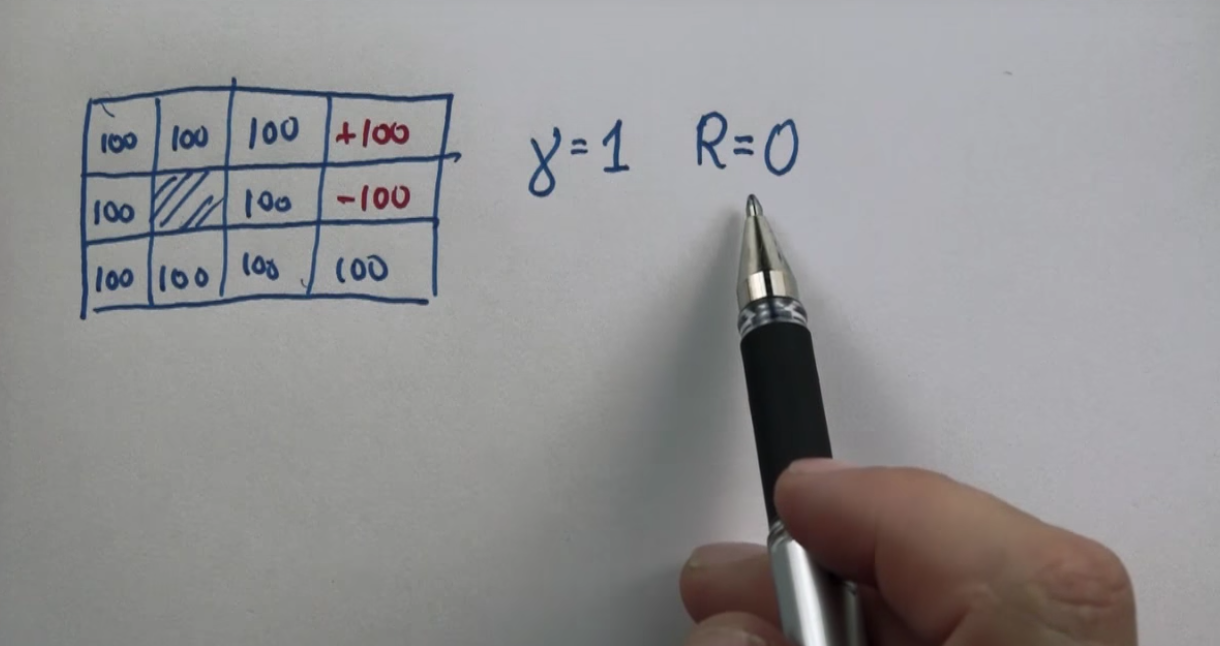

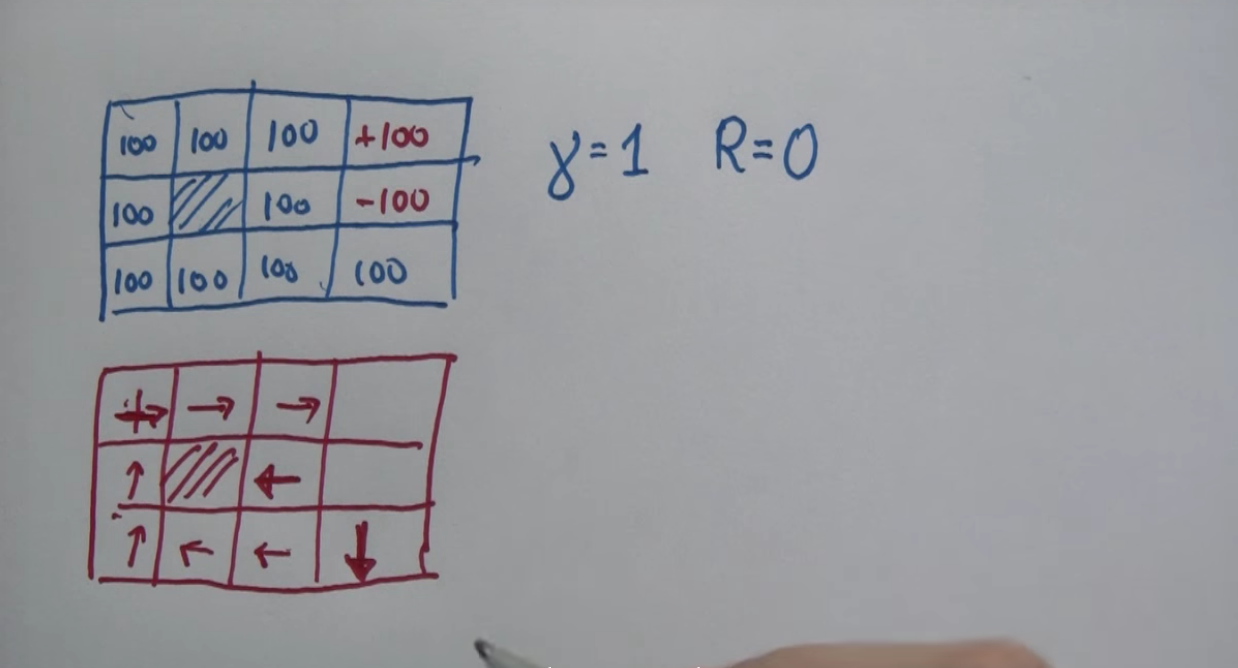

Quiz: Deterministic Question 1¶

Quiz: Deterministic Question 2¶

Quiz: Deterministic Question 3¶

Quiz: Stochastic Question 1¶

Quiz: Stochastic Question 2¶

```python
>>> 77 * 0.8 + (0.1 * -100) - 3
48.6
```
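The expression above reads like a single value backup: an 80% chance of reaching a state worth 77, a 10% chance of reaching a state worth -100, and a cost of 3 for taking the step. The sketch below spells that out under some assumptions that are not stated in the notes: the remaining 10% outcome is taken to contribute a value of 0 (which would explain why it is missing from the expression), no discounting is applied, and the names `backup`, `outcomes`, and `step_cost` are hypothetical.

```python
import math


def backup(outcomes, step_cost):
    """Expected value of one action: sum of p * V(next state) minus the step cost."""
    return sum(p * v for p, v in outcomes) - step_cost


# (probability, value of the resulting state). The last entry is an assumed
# zero-valued outcome that the original expression leaves out.
outcomes = [(0.8, 77), (0.1, -100), (0.1, 0)]

value = backup(outcomes, step_cost=3)
print(round(value, 1))  # 48.6
```

Rounding before printing avoids showing floating-point noise in the last digits; comparisons are better done with `math.isclose` than with `==`.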

Value Iterations and Policy 1¶

Value Iterations And Policy 2¶

Markov Decision Process Conclusion¶

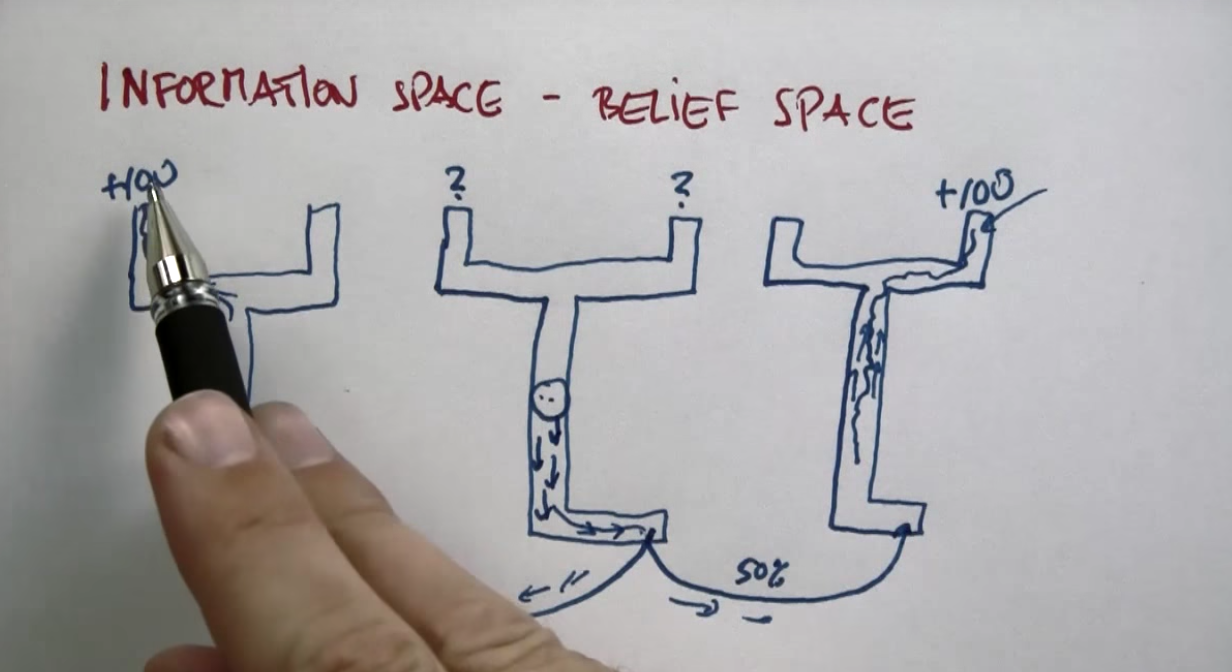

Partial Observability Introduction¶

POMDP Vs MDP¶

POMDP¶

Planning Under Uncertainty Conclusion¶

Further Study

Charles Isbell and Michael Littman’s ML course

Peter Norvig and Sebastian Thrun’s AI course: